For a full publication list, please see my Google Scholar profile.

Current Projects

RACER: High-Speed Autonomous Off-Road Driving

DARPA Robotics Challenge

University of Washington

[Video 1] [Video 2] [Team Website] [Press Release] [Article]

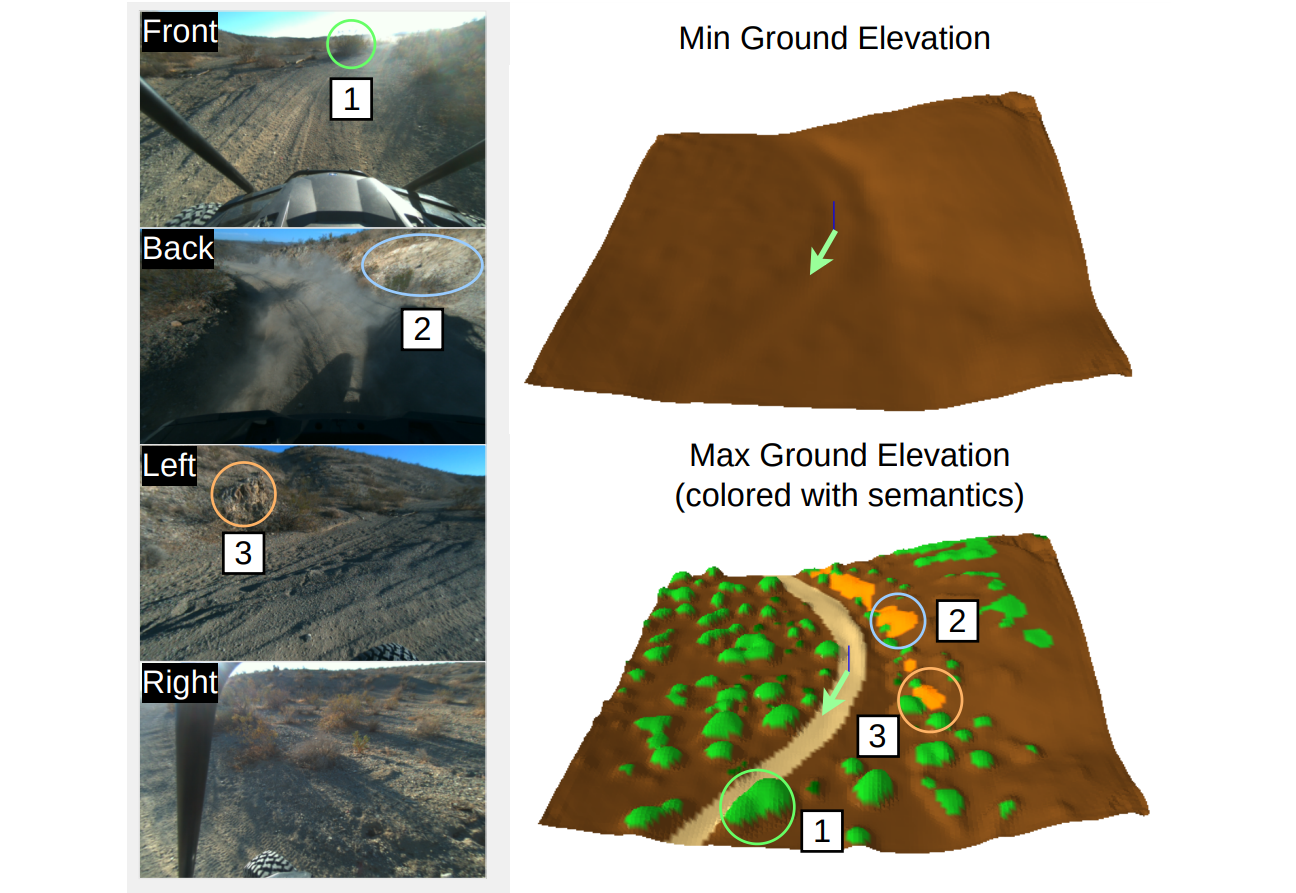

The DARPA-sponsored RACER program (“Robotic Autonomy in Complex Environments with Resiliency”) aims to match human-expert-level performance in high-speed driving through unstructured outdoor environments. The unmanned ground vehicles (UGVs) must operate on a variety of terrain types, such as desert-like and forested environments, and remain resilient under varied conditions. The competition is conducted using modified Polaris RZR off-road vehicles outfitted with state-of-the-art computing and sensing capabilities. The University of Washington team was one of three teams in the country selected to take part in the challenge (the others being Carnegie Mellon University and NASA Jet Propulsion Laboratory).

Learning Complex Terrain Maneuvers from Demonstration

University of Washington

Off-road autonomous vehicles must maneuver through thick vegetation, forested areas, and rough terrain. However, in such unstructured and variable environments it is difficult to define what is traversable. A planner’s anticipated interaction with local terrain features can be represented by a costmap, which can be learned from human demonstrations. This is being investigated with an approach that combines Inverse Reinforcement Learning (IRL) and semantic feature prediction. Testing and deployment are performed on a Clearpath Warthog, an autonomous amphibious ground robot.
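To make the costmap-learning idea concrete, here is a minimal sketch, assuming a linear costmap over hypothetical per-cell semantic feature channels and a simple feature-matching subgradient update in the spirit of maximum-margin planning. It is not the exact IRL formulation used in this project; the grid, the `grass`/`rock` channels, the weights, and the helper names (`cost_map`, `plan`, `irl_step`) are all illustrative. Each step plans with the current costs, then lowers the weight of features the expert used more than the planner did.

```python
import heapq


def cost_map(features, weights):
    """Per-cell cost as a weighted sum of hypothetical semantic feature channels.

    features[r][c] is a list of K non-negative feature values for cell (r, c).
    """
    return [[sum(w * f for w, f in zip(weights, cell)) for cell in row] for row in features]


def plan(costs, start, goal):
    """Dijkstra on a 4-connected grid; entering a cell pays that cell's cost.

    Assumes non-negative costs and a reachable goal.
    """
    rows, cols = len(costs), len(costs[0])
    dist, prev = {start: 0.0}, {}
    heap = [(0.0, start)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if (r, c) == goal:
            break
        if d > dist[(r, c)]:
            continue  # stale heap entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + costs[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)], prev[(nr, nc)] = nd, (r, c)
                    heapq.heappush(heap, (nd, (nr, nc)))
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


def feature_counts(path, features):
    """Total of each feature channel along a path."""
    return [sum(features[r][c][k] for r, c in path) for k in range(len(features[0][0]))]


def irl_step(weights, features, expert_path, lr=0.1, min_w=1e-3):
    """One subgradient step that makes the expert path cheaper relative to the planned path."""
    planned = plan(cost_map(features, weights), expert_path[0], expert_path[-1])
    f_exp = feature_counts(expert_path, features)
    f_plan = feature_counts(planned, features)
    # Features the expert used more than the planner become cheaper, and vice versa;
    # clipping keeps every weight positive so Dijkstra's assumption holds.
    return [max(min_w, w - lr * (fe - fp)) for w, fe, fp in zip(weights, f_exp, f_plan)]


# Channels: [grass, rock]. The demonstrator drives across the grass cell in the top row.
features = [[[0, 0], [1, 0], [0, 0]],
            [[0, 0], [0, 1], [0, 0]]]
expert = [(0, 0), (0, 1), (0, 2)]
weights = [2.0, 0.5]  # initial guess: grass looks more expensive than rock
for _ in range(20):
    weights = irl_step(weights, features, expert)
print(weights, plan(cost_map(features, weights), (0, 0), (0, 2)))
```

Once the learned weights make grass cheaper than rock, the planner reproduces the demonstration and the updates stop, because the expert and planner feature counts match.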

Research Papers

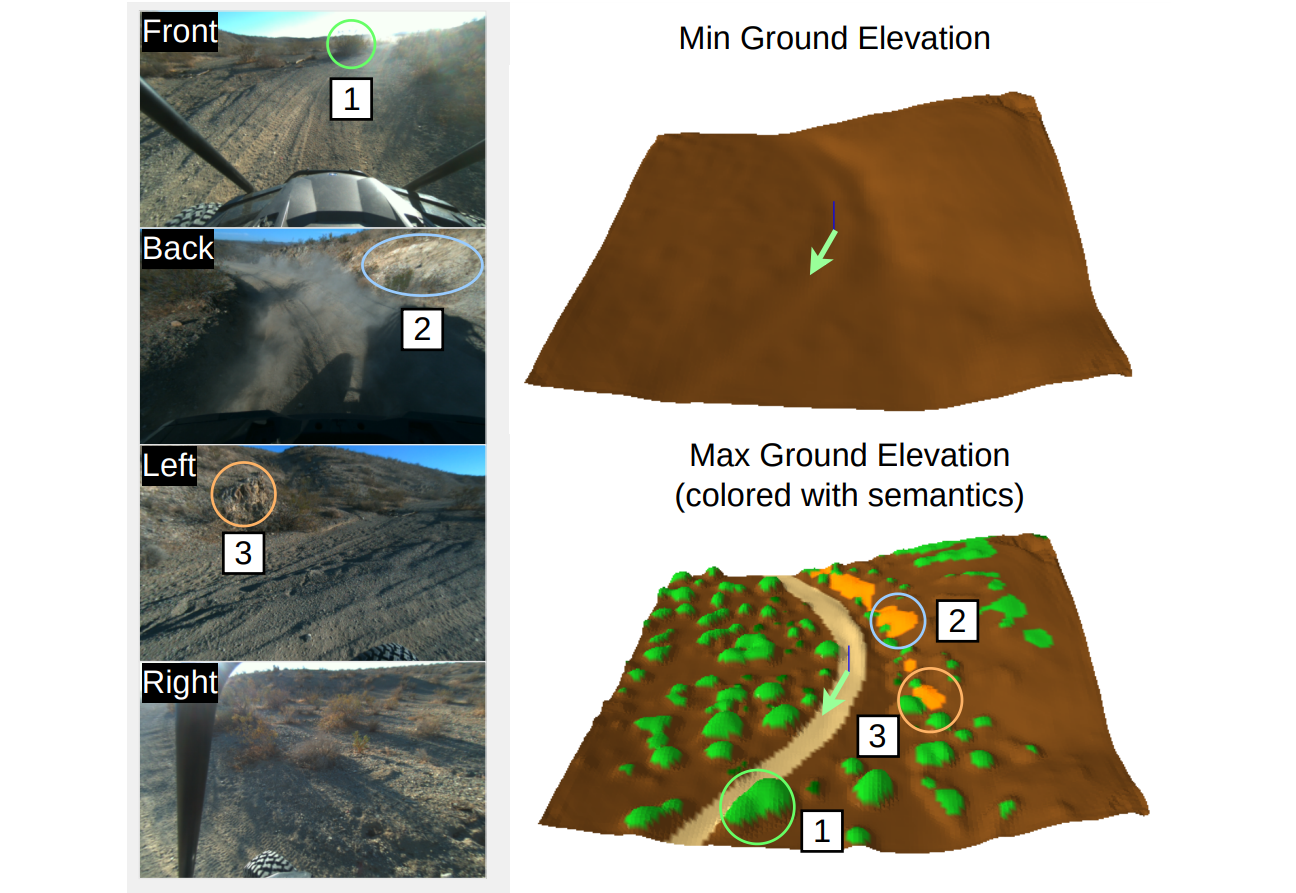

TerrainNet: Visual Modeling of Complex Terrains for High-speed, Off-road Navigation

Robotics: Science and Systems (RSS), 2023

X. Meng, N. Hatch, A. Lambert, A. Li, N. Wagener, M. Schmittle, J. Lee, W. Yuan, Z. Chen, S. Deng, G. Okopal, D. Fox, B. Boots, A. Shaban

[pdf] [website]

Abstract: Effective use of camera-based vision systems is essential for robust performance in autonomous off-road driving, particularly in the high-speed regime. Despite success in structured, on-road settings, current end-to-end approaches for scene prediction have yet to be successfully adapted for complex outdoor terrain. To this end, we present TerrainNet, a vision-based terrain perception system for semantic and geometric terrain prediction for aggressive off-road navigation. The approach relies on several key insights and practical considerations for achieving reliable terrain modeling. The network includes a multi-headed output representation to capture fine- and coarse-grained terrain features necessary for estimating traversability. Accurate depth estimation is achieved using self-supervised depth completion with multi-view RGB and stereo inputs. Requirements for real-time performance and fast inference speeds are met using efficient, learned image feature projections. Furthermore, the model is trained on a large-scale, real-world off-road dataset collected across a variety of outdoor environments. We show how TerrainNet can also be used for costmap prediction and provide a detailed framework for integration into a planning module. We demonstrate the performance of TerrainNet through extensive comparison to current state-of-the-art baselines for camera-only scene prediction. Finally, we showcase the effectiveness of integrating TerrainNet within a complete autonomous-driving stack by conducting a real-world vehicle test in a challenging off-road scenario.

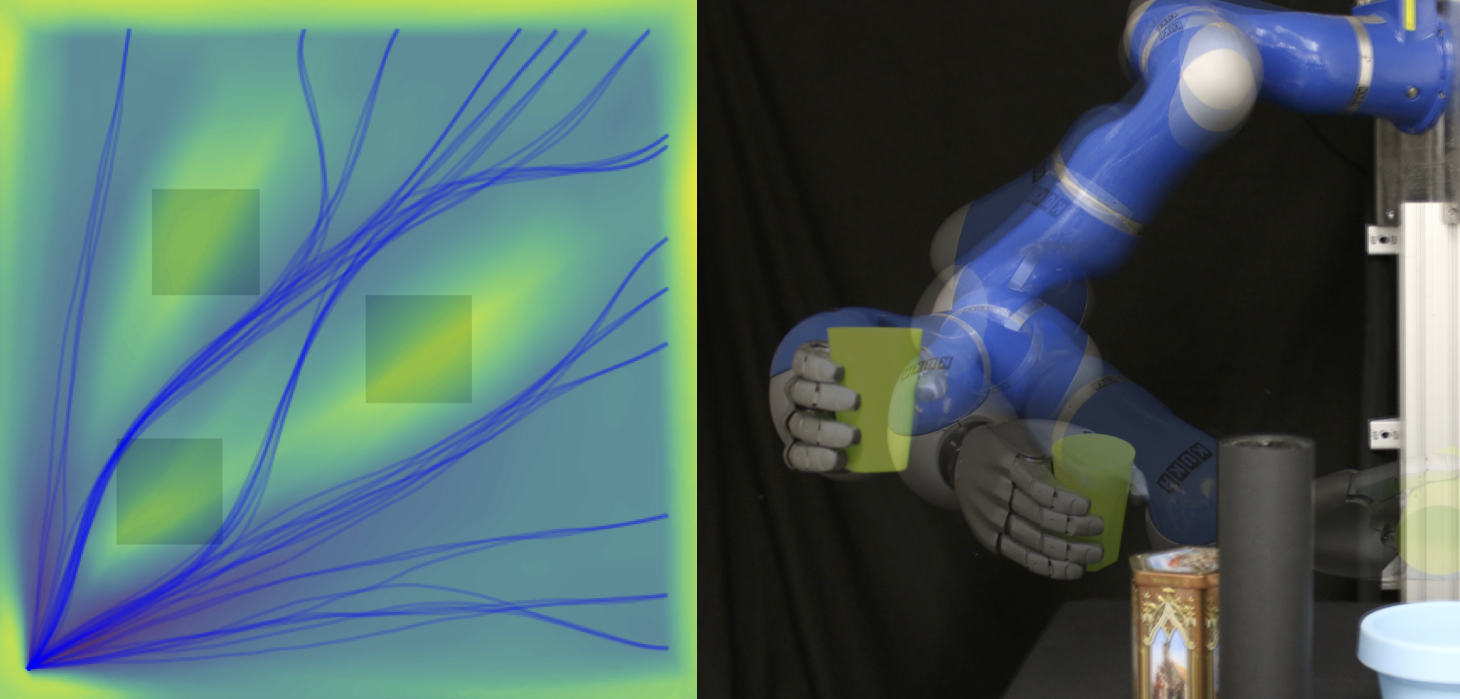

Learning Implicit Priors for Motion Optimization

IEEE International Conference on Intelligent Robots and Systems (IROS), 2022

A. T. Le*, A. Lambert*, J. Urain*, G. Chalvatzaki, B. Boots, J. Peters

[pdf] [website]

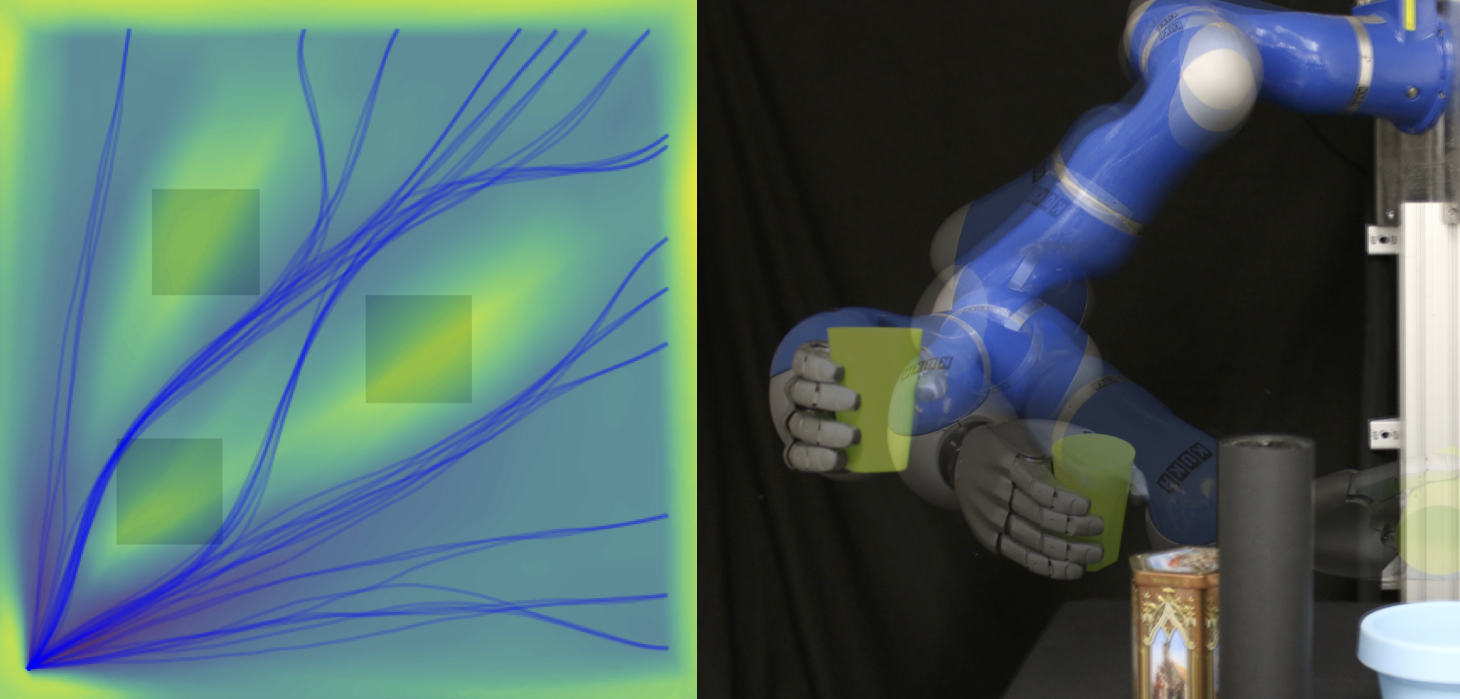

Abstract: Motion optimization is an effective framework for generating smooth and safe trajectories for robotic manipulation tasks. However, it suffers from local optima that hinder its applicability, especially for multi-objective tasks. In this paper, we study this problem in light of the integration of Energy-Based Models (EBMs) as guiding priors in motion optimization. EBMs are probabilistic models with unnormalized energy functions that represent expressive multimodal distributions. Due to their implicit nature, EBMs can easily be integrated as data-driven factors or initial sampling distributions in the motion optimization problem. This work presents a set of necessary modeling and algorithmic choices to effectively learn and integrate EBMs into motion optimization. We investigate the benefits of smoothness regularization during learning for gradient-based optimizers. Moreover, we present a set of EBM architectures for learning generalizable distributions over trajectories that are important for the subsequent deployment of EBMs. We provide extensive empirical results on a set of representative tasks against competitive baselines, demonstrating the superiority of EBMs as priors in motion optimization and scaling up to a 7-dof robot pouring task that can be easily transferred to a real robotic system.
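As a toy illustration of an energy function entering motion optimization as an extra cost factor, the sketch below runs plain gradient descent on a 1-D waypoint trajectory whose objective is a finite-difference smoothness term plus `beta` times a prior energy. The double-well `energy` is a hand-written, hypothetical stand-in for a learned EBM (it is low near -1 and +1, mimicking a multimodal prior); the paper's architectures, training procedure, and optimizer are not reproduced here.

```python
def energy(x):
    """Hypothetical double-well energy standing in for a learned EBM: low near x = -1 and x = +1."""
    return (x * x - 1.0) ** 2


def energy_grad(x):
    """Derivative of the double-well energy."""
    return 4.0 * x * (x * x - 1.0)


def objective(traj, beta):
    """Smoothness cost (squared finite differences) plus beta times the prior energy."""
    smooth = sum((b - a) ** 2 for a, b in zip(traj, traj[1:]))
    return smooth + beta * sum(energy(x) for x in traj)


def optimize(traj, beta=1.0, lr=0.01, iters=500):
    """Plain gradient descent on the interior waypoints; the endpoints stay fixed."""
    traj = list(traj)
    for _ in range(iters):
        grad = [0.0] * len(traj)
        for t in range(1, len(traj) - 1):
            # d/dx_t of (x_t - x_{t-1})^2 + (x_{t+1} - x_t)^2
            smooth_grad = 2.0 * (2.0 * traj[t] - traj[t - 1] - traj[t + 1])
            grad[t] = smooth_grad + beta * energy_grad(traj[t])
        traj = [x - lr * g for x, g in zip(traj, grad)]
    return traj


init = [1.0, 0.5, 0.2, 0.6, 1.0]
result = optimize(init)
print(objective(init, 1.0), objective(result, 1.0), result)
```

Here the prior pulls the sagging interior waypoints into the low-energy mode at +1, which the smoothness term alone would not do; with a learned EBM, the same factor would instead encode modes observed in demonstration data.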

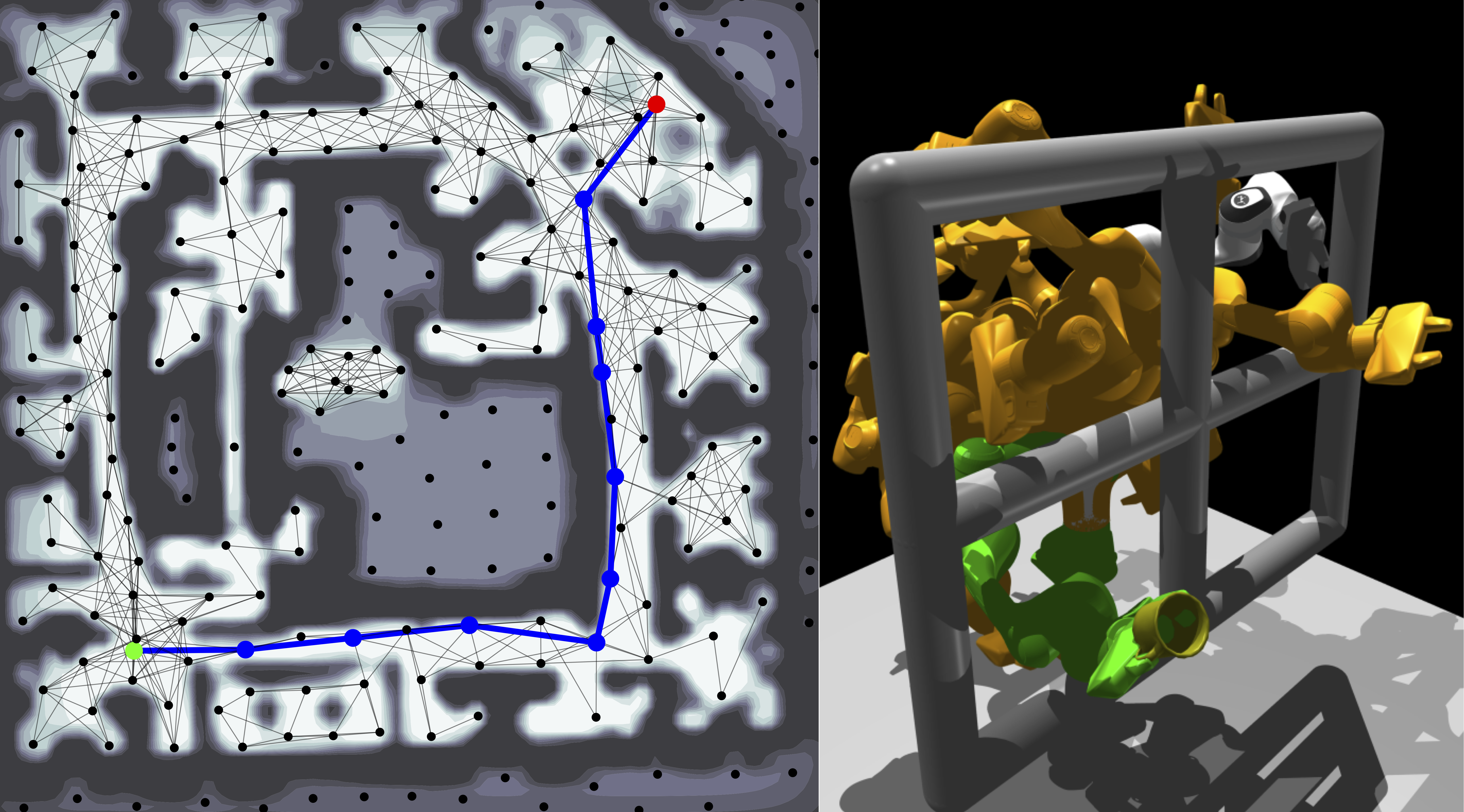

Stein Variational Probabilistic Roadmaps

IEEE International Conference on Robotics and Automation (ICRA), 2022

A. Lambert, B. Hou, R. Scalise, S. Srinivasa, B. Boots

[pdf] [video] [website]

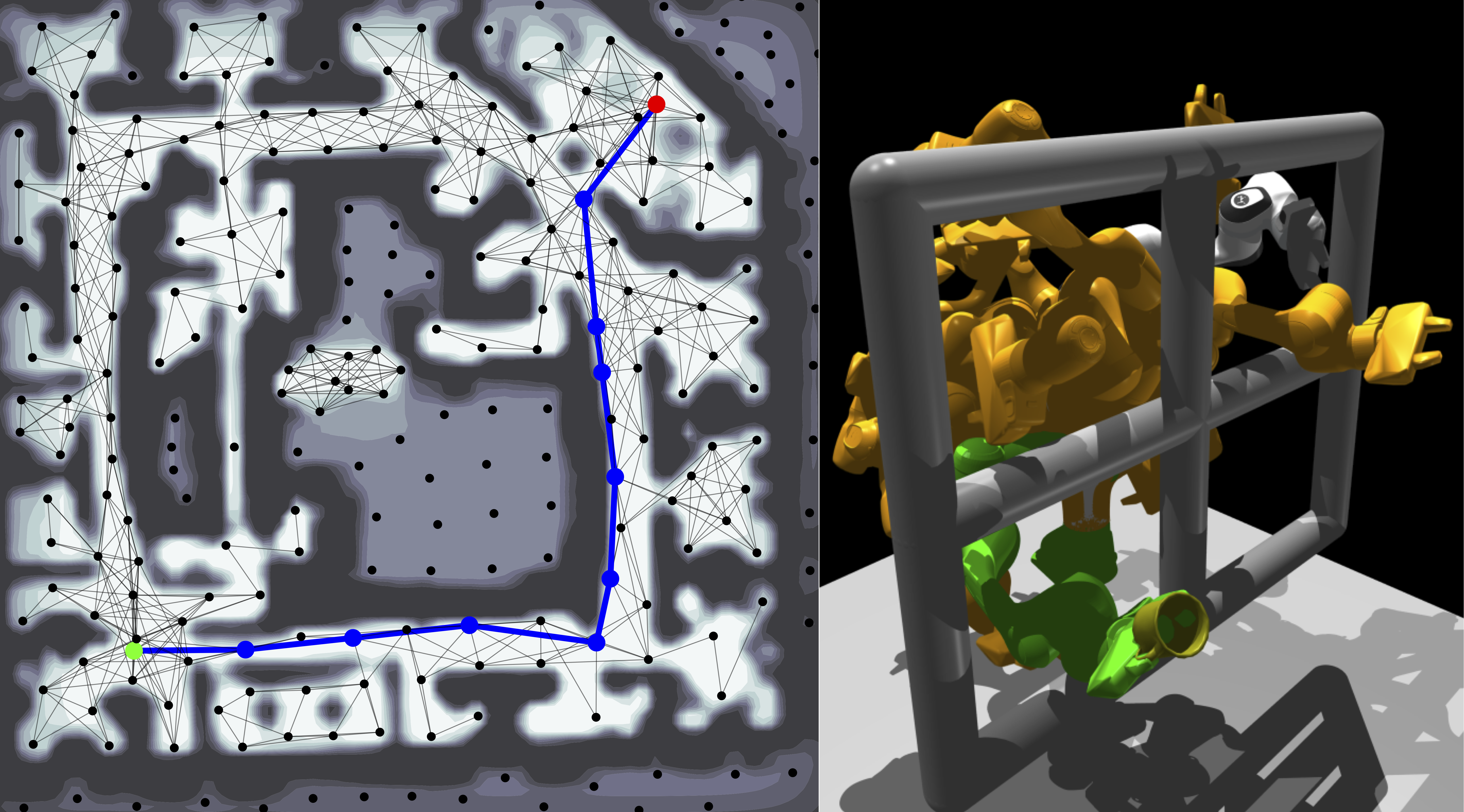

Abstract: Efficient and reliable generation of global path plans is necessary for the safe execution and deployment of autonomous systems. In order to generate planning graphs that adequately resolve the topology of a given environment, many sampling-based motion planners resort to coarse, heuristically driven strategies, which often fail to generalize to new and varied surroundings. Further, many of these approaches are not designed to contend with partial observability. We posit that such uncertainty in environment geometry can, in fact, help drive the sampling process in generating feasible and probabilistically safe planning graphs. We propose a method for Probabilistic Roadmaps that relies on particle-based Variational Inference to efficiently cover the posterior distribution over feasible regions in configuration space. Our approach, Stein Variational Probabilistic Roadmap (SV-PRM), results in sample-efficient generation of planning graphs and large improvements over traditional sampling approaches. We demonstrate the approach on a variety of challenging planning problems, including real-world probabilistic occupancy maps and high-dof manipulation problems common in robotics.
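The abstract mentions probabilistic occupancy maps and probabilistically safe planning graphs. As a hedged illustration of the second idea only, the sketch below validates roadmap edges against an occupancy grid by estimating the probability that a straight edge touches an occupied cell, assuming cell occupancies are independent. Node placement via Stein variational inference, which is the core of SV-PRM, is omitted; the grid, the `max_collision_prob` threshold, and the helper names are hypothetical.

```python
import math


def edge_collision_prob(a, b, occ, res=1.0, samples=20):
    """Probability that the straight edge a -> b touches an occupied cell.

    occ[r][c] is the occupancy probability of the cell covering
    x in [c * res, (c + 1) * res) and y in [r * res, (r + 1) * res).
    Cells are found by dense sampling along the edge, and cell occupancies are
    treated as independent (a simplifying assumption).
    """
    cells = set()
    for i in range(samples + 1):
        t = i / samples
        x = a[0] + t * (b[0] - a[0])
        y = a[1] + t * (b[1] - a[1])
        cells.add((int(y // res), int(x // res)))
    p_free = 1.0
    for r, c in cells:
        p_free *= 1.0 - occ[r][c]
    return 1.0 - p_free


def build_roadmap(nodes, occ, radius, max_collision_prob=0.05):
    """Connect node pairs within `radius` whose edge is probably collision-free."""
    edges = []
    for i in range(len(nodes)):
        for j in range(i + 1, len(nodes)):
            close = math.dist(nodes[i], nodes[j]) <= radius
            if close and edge_collision_prob(nodes[i], nodes[j], occ) <= max_collision_prob:
                edges.append((i, j))
    return edges


occ = [[0.0, 0.0, 0.0],
       [0.0, 0.9, 0.0],   # the center cell is probably occupied
       [0.0, 0.0, 0.0]]
nodes = [(0.5, 0.5), (2.5, 0.5), (0.5, 2.5), (2.5, 2.5)]
print(build_roadmap(nodes, occ, radius=3.0))
```

Both diagonals cross the likely-occupied center cell, so only the four border edges survive the 5% collision-probability threshold.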

Entropy Regularized Motion Planning via Stein Variational Inference

RSS 2021 Workshop on Integrating Planning and Learning

A. Lambert, B. Boots

[pdf] [video]

Abstract: Many imitation and reinforcement learning approaches rely on the availability of expert-generated demonstrations for learning policies or value functions from data. Obtaining a reliable distribution of trajectories from motion planners is non-trivial, since it must broadly cover the space of states likely to be encountered during execution while also satisfying task-based constraints. We propose a sampling strategy based on variational inference to generate distributions of feasible, low-cost trajectories for high-dof motion planning tasks. This includes a distributed, particle-based motion planning algorithm which leverages structured graphical representations for inference over multi-modal posterior distributions. We also make explicit connections to both approximate inference for trajectory optimization and entropy-regularized reinforcement learning.

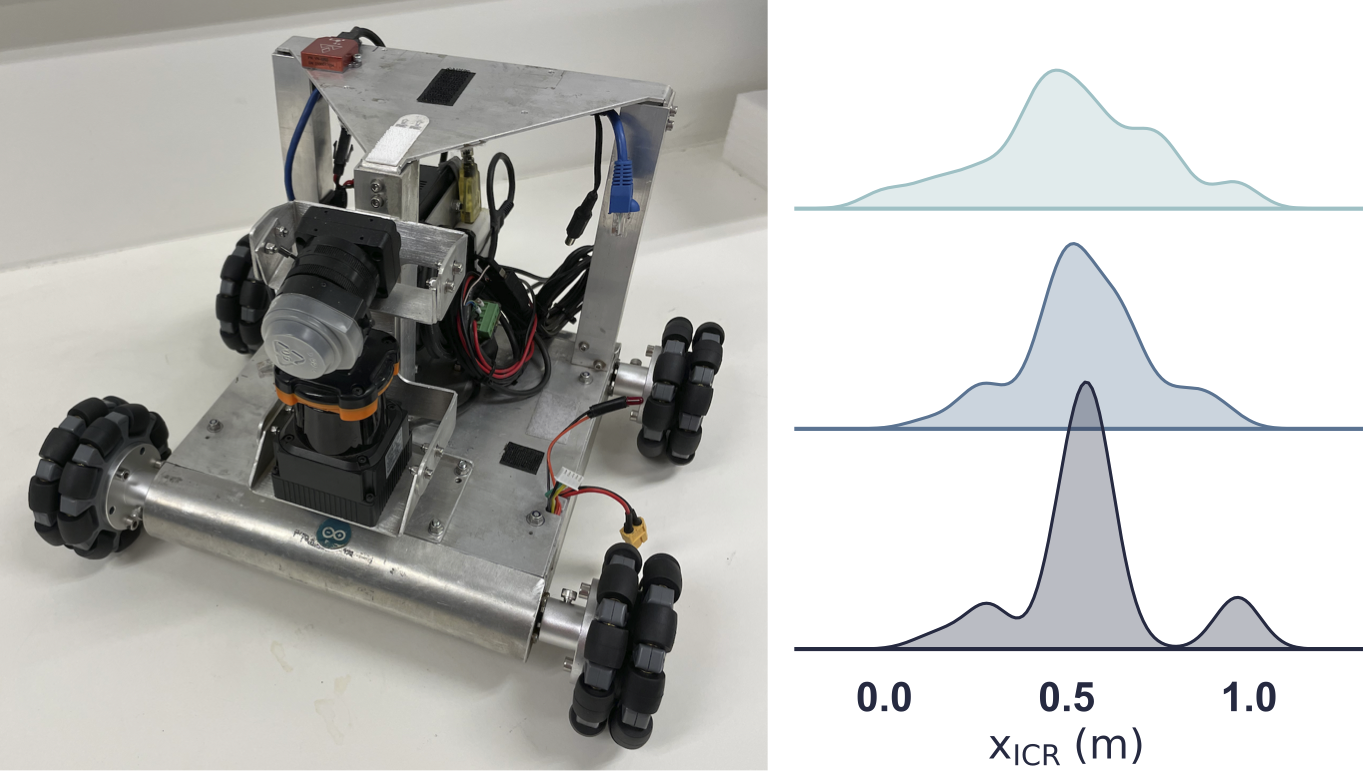

Dual Online Stein Variational Inference for Control and Dynamics

Robotics: Science and Systems (RSS), 2021

L. Barcelos, A. Lambert, R. Oliveira, P. Borges, B. Boots, and F. Ramos

[pdf] [video]

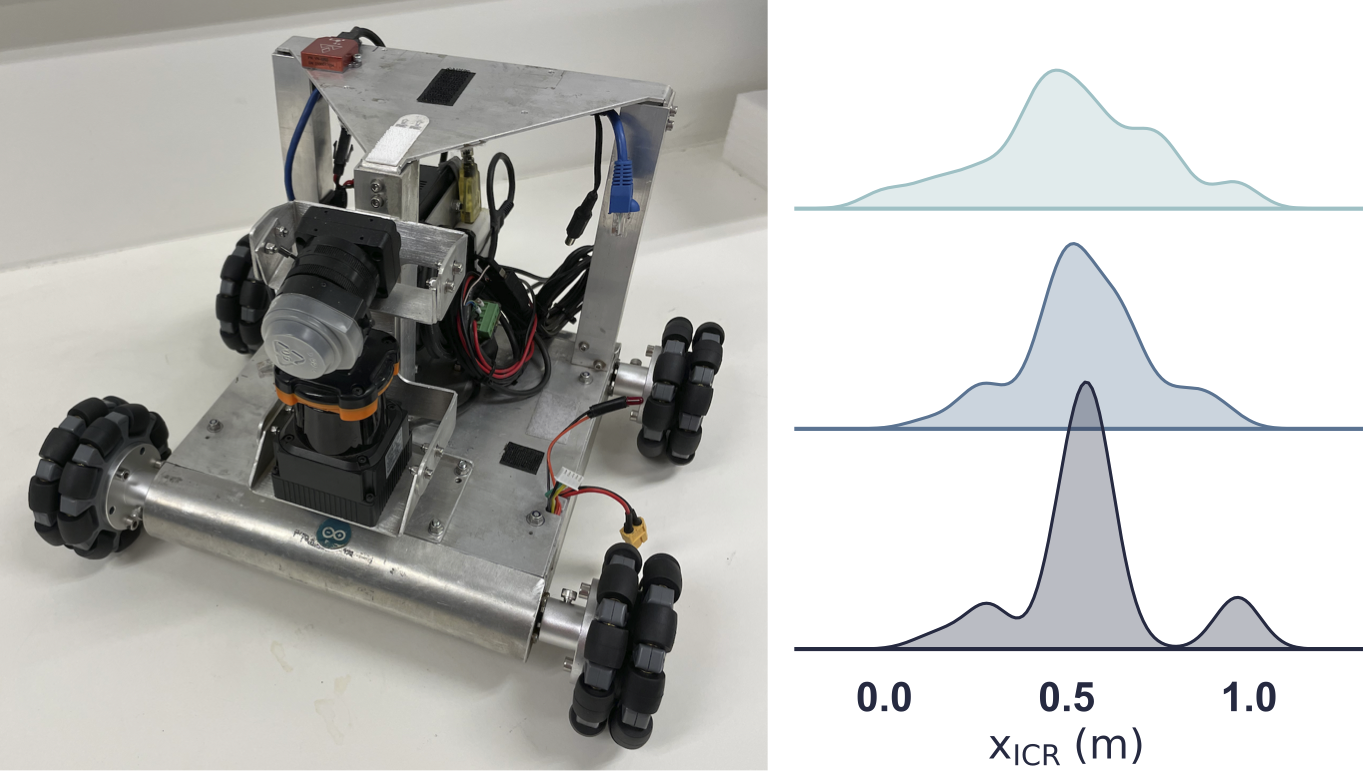

Abstract: Model predictive control (MPC) schemes have a proven track record for delivering aggressive and robust performance in many challenging control tasks, coping with nonlinear system dynamics, constraints, and observational noise. Despite their success, these methods often rely on simple control distributions, which can limit their performance in highly uncertain and complex environments. MPC frameworks must be able to accommodate changing distributions over system parameters, based on the most recent measurements. In this paper, we devise an implicit variational inference algorithm able to estimate distributions over model parameters and control inputs on-the-fly. The method incorporates Stein variational gradient descent to approximate the target distributions as a collection of particles, and performs updates based on a Bayesian formulation. This enables the approximation of complex multi-modal posterior distributions, typically occurring in challenging and realistic robot navigation tasks. We demonstrate our approach on both simulated and real-world experiments requiring real-time execution in the face of dynamically changing environments.

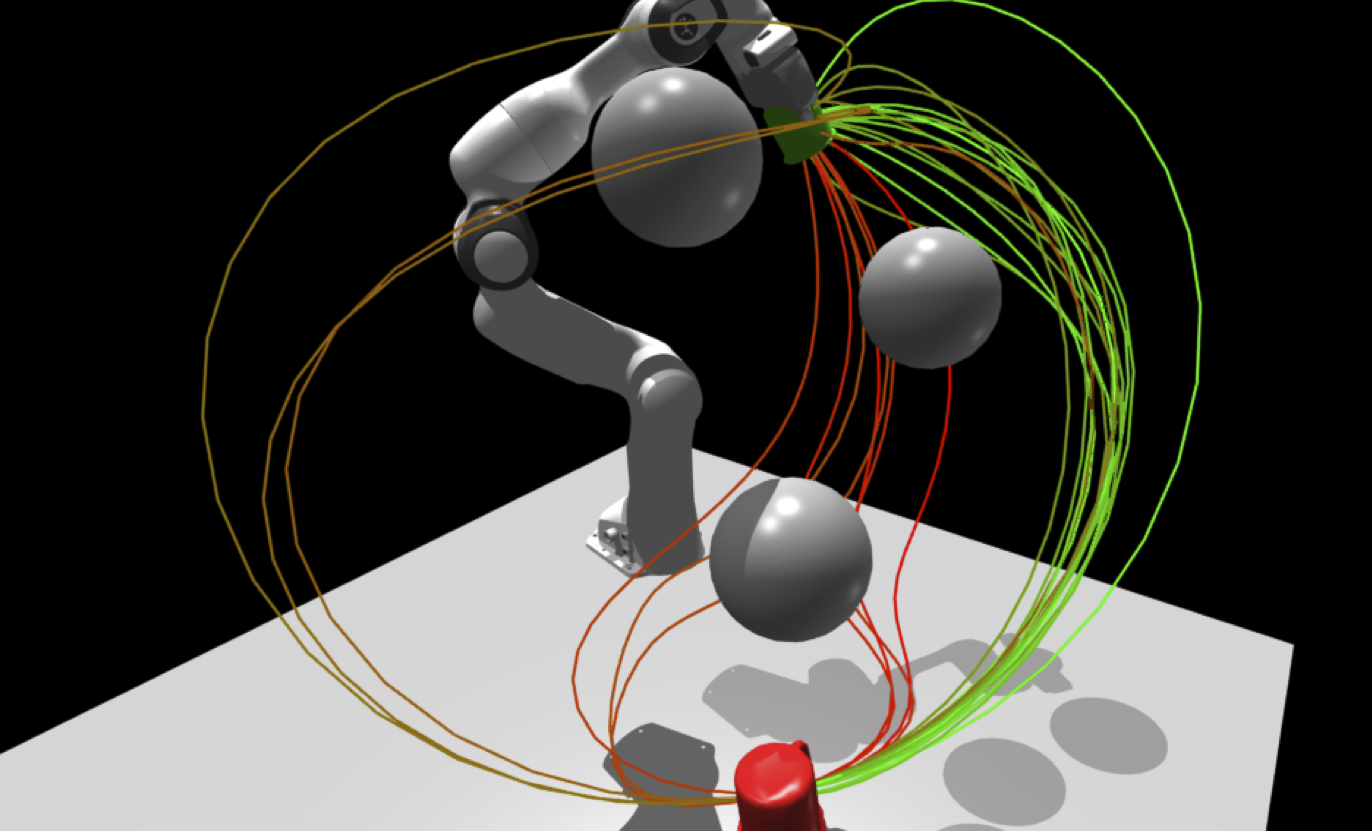

Stein Variational Model Predictive Control

Conference on Robot Learning (CoRL), 2020

A. Lambert, A. Fishman, D. Fox, B. Boots and F. Ramos

[pdf] [video]

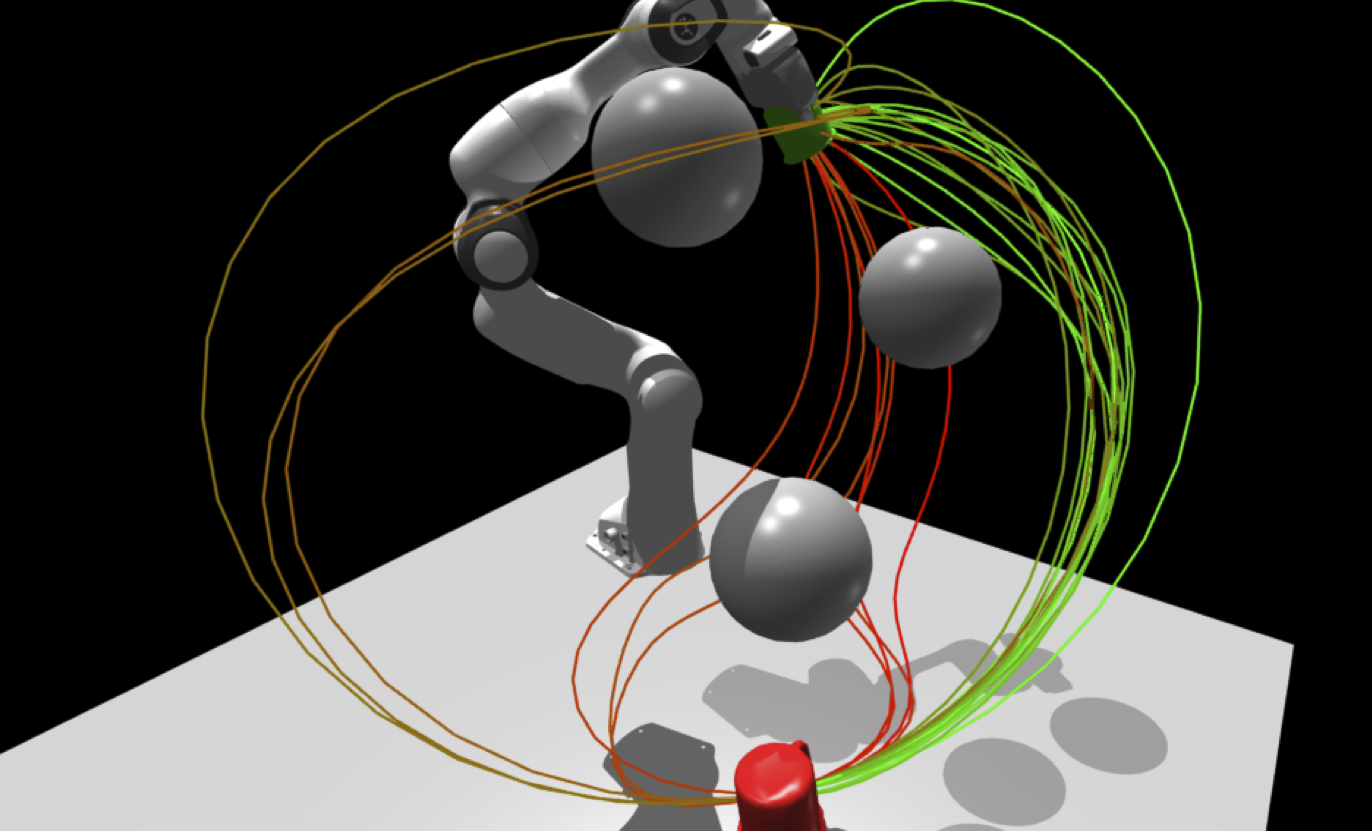

Abstract: Decision making under uncertainty is critical to real-world, autonomous systems. Model Predictive Control (MPC) methods have demonstrated favorable performance in practice, but remain limited when dealing with complex probability distributions. In this paper, we propose a generalization of MPC that represents a multitude of solutions as posterior distributions. By casting MPC as a Bayesian inference problem, we employ variational methods for posterior computation, naturally encoding the complexity and multi-modality of the decision making problem. We present a Stein variational gradient descent method to estimate the posterior directly over control parameters, given a cost function and observed state trajectories. We show that this framework leads to successful planning in challenging, non-convex optimal control problems.
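A minimal sketch of the idea on a toy problem: Stein variational gradient descent (SVGD) particles are control sequences for a 1-D single integrator, the unnormalized posterior is `p(u) ∝ exp(-cost(u) / LAM)`, and a receding-horizon loop applies the first control of the lowest-cost particle, then shifts every particle forward as a warm start. The dynamics, cost, constants, fixed RBF bandwidth, deterministic initialization, and best-particle selection rule are assumptions for illustration rather than the paper's exact choices, and this toy posterior is unimodal, so it does not exhibit the multi-modality the method is designed for.

```python
import math

DT, H, GOAL, EPS, LAM = 0.1, 10, 1.0, 0.01, 0.1  # hypothetical toy settings


def rollout_cost(x0, u):
    """Terminal-error plus control-effort cost for a 1-D single integrator x' = u."""
    x_final = x0 + DT * sum(u)
    return (x_final - GOAL) ** 2 + EPS * sum(ui * ui for ui in u)


def score(x0, u):
    """Gradient of log p(u) = -rollout_cost(u) / LAM, an unnormalized Gibbs posterior."""
    err = x0 + DT * sum(u) - GOAL
    return [-(2.0 * err * DT + 2.0 * EPS * ui) / LAM for ui in u]


def svgd(x0, particles, iters=50, step=0.5, h=1.0):
    """SVGD over control sequences with the RBF kernel k(a, b) = exp(-||a - b||^2 / h)."""
    n = len(particles)
    for _ in range(iters):
        scores = [score(x0, p) for p in particles]
        updated = []
        for pi in particles:
            phi = [0.0] * len(pi)
            for pj, sj in zip(particles, scores):
                diff = [a - b for a, b in zip(pi, pj)]  # x_i - x_j
                k = math.exp(-sum(d * d for d in diff) / h)
                for t in range(len(pi)):
                    # Kernel-weighted score (attraction) plus kernel gradient (repulsion).
                    phi[t] += k * sj[t] + (2.0 / h) * diff[t] * k
            updated.append([a + step * f / n for a, f in zip(pi, phi)])
        particles = updated
    return particles


# Seven particles, each a constant control sequence (deterministic toy initialization).
particles = [[-0.15 + 0.05 * i] * H for i in range(7)]
x = 0.0
for _ in range(40):  # receding-horizon loop
    particles = svgd(x, particles)
    best = min(particles, key=lambda u: rollout_cost(x, u))
    x += DT * best[0]  # apply only the first control
    particles = [p[1:] + p[-1:] for p in particles]  # shift the plans for a warm start
print(round(x, 3))
```

The repulsive kernel-gradient term is what keeps the particles spread over the posterior instead of collapsing onto a single optimum, which is the property that distinguishes this from running several independent optimizers.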

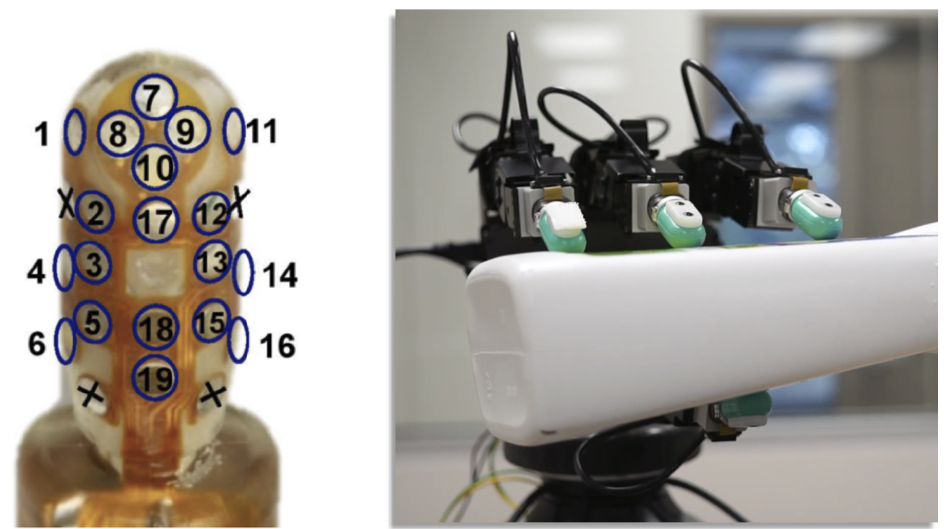

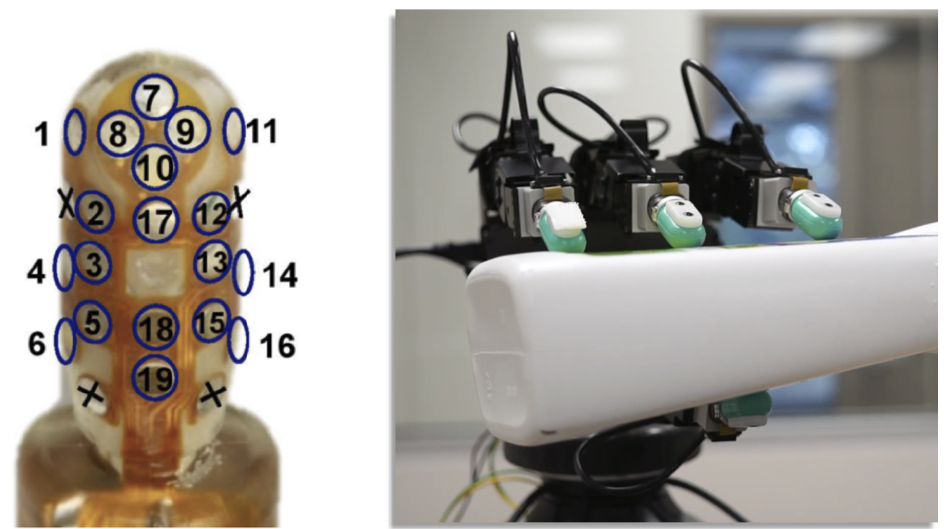

Robust Learning of Tactile Force Estimation through Robot Interaction

IEEE International Conference on Robotics and Automation (ICRA), 2019

B. Sundaralingam, A. Lambert, A. Handa, B. Boots, T. Hermans, S. Birchfield, N. Ratliff and D. Fox

[pdf] [video]

(Finalist for Best Manipulation Paper)

Abstract: Current methods for estimating force from tactile sensor signals are either inaccurate analytic models or task-specific learned models. In this paper, we explore learning a robust model that maps tactile sensor signals to force. We specifically explore learning a mapping for the SynTouch BioTac sensor via neural networks. We propose a voxelized input feature layer for spatial signals and leverage information about the sensor surface to regularize the loss function. To learn a robust tactile force model that transfers across tasks, we generate ground truth data from three different sources: (1) the BioTac rigidly mounted to a force torque (FT) sensor, (2) a robot interacting with a ball rigidly attached to the same FT sensor, and (3) through force inference on a planar pushing task by formalizing the mechanics as a system of particles and optimizing over the object motion. A total of 140k samples were collected from the three sources. We achieve a median angular accuracy of 3.5 degrees in predicting force direction (66% improvement over the current state of the art) and a median magnitude accuracy of 0.06 N (93% improvement) on a test dataset. Additionally, we evaluate the learned force model in a force feedback grasp controller performing object lifting and gentle placement.
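The two reported metrics can be computed as below, assuming "angular accuracy" means the angle between predicted and ground-truth force vectors and "magnitude accuracy" means the absolute difference of their norms; the paper's exact definitions may differ, and the sample vectors are made up.

```python
import math
import statistics


def angular_error_deg(pred, true):
    """Angle in degrees between predicted and ground-truth 3-D force vectors."""
    dot = sum(p * t for p, t in zip(pred, true))
    cos = dot / (math.hypot(*pred) * math.hypot(*true))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp rounding error


def magnitude_error(pred, true):
    """Absolute difference of force magnitudes (in newtons if the inputs are)."""
    return abs(math.hypot(*pred) - math.hypot(*true))


def median_errors(preds, trues):
    """Median angular error (degrees) and median magnitude error over a test set."""
    ang = [angular_error_deg(p, t) for p, t in zip(preds, trues)]
    mag = [magnitude_error(p, t) for p, t in zip(preds, trues)]
    return statistics.median(ang), statistics.median(mag)


preds = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 2.0)]  # made-up predictions
trues = [(1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 0.0, 1.0)]  # made-up ground truth
print(median_errors(preds, trues))
```

Separating direction and magnitude errors matters here: the third made-up prediction points in exactly the right direction but has twice the magnitude, which only the second metric reveals.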

Joint Inference of Kinematic and Force Trajectories with Visuo-Tactile Sensing

IEEE International Conference on Robotics and Automation (ICRA), 2019

A. Lambert, M. Mukadam, B. Sundaralingam, N. Ratliff, B. Boots and D. Fox

[pdf] [video] [poster]

Abstract: To perform complex tasks, robots must be able to interact with and manipulate their surroundings. One of the key challenges in accomplishing this is robust state estimation during physical interactions, where the state involves not only the robot and the object being manipulated, but also the state of the contact itself. In this work, within the context of planar pushing, we extend previous inference-based approaches to state estimation in several ways. We estimate the robot, object, and the contact state on multiple manipulation platforms configured with a vision-based articulated model tracker, and either a biomimetic tactile sensor or a force-torque sensor. We show how to fuse raw measurements from the tracker and tactile sensors to jointly estimate the trajectory of the kinematic states and the forces in the system via probabilistic inference on factor graphs, in both batch and incremental settings. We perform several benchmarks with our framework and show how performance is affected by incorporating various geometric and physics-based constraints, occluding vision sensors, or injecting noise in tactile sensors. We also compare with prior work on multiple datasets and demonstrate that our approach can effectively optimize over multi-modal sensor data and reduce uncertainty to find better state estimates.
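To show what batch inference on a factor graph reduces to in the simplest case, here is a 1-D, linear-Gaussian sketch: each factor is a weighted linear measurement of the states, and the MAP estimate solves the resulting normal equations. The prior, relative-motion, and measurement factors are hypothetical. The pushing problem in the paper involves nonlinear kinematic and contact factors that would be relinearized and solved iteratively (a single Gauss-Newton step is exact only in this linear case), and incremental settings update the solution as new factors arrive rather than re-solving from scratch.

```python
def solve(matrix, vector):
    """Dense Gaussian elimination with partial pivoting (fine for a toy-sized system)."""
    n = len(vector)
    m = [row[:] + [v] for row, v in zip(matrix, vector)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def batch_estimate(num_states, factors):
    """MAP estimate for linear-Gaussian factors.

    Each factor is (coeffs, z, sigma): sum(coeffs[i] * x[i]) is measured as z with
    standard deviation sigma. Accumulates J^T W J and J^T W z, then solves.
    """
    info = [[0.0] * num_states for _ in range(num_states)]
    vec = [0.0] * num_states
    for coeffs, z, sigma in factors:
        w = 1.0 / sigma ** 2
        for i, ci in coeffs.items():
            vec[i] += w * ci * z
            for j, cj in coeffs.items():
                info[i][j] += w * ci * cj
    return solve(info, vec)


factors = [
    ({0: 1.0}, 0.0, 0.1),           # prior on the first state
    ({0: -1.0, 1: 1.0}, 1.0, 0.1),  # relative motion x1 - x0
    ({1: -1.0, 2: 1.0}, 1.0, 0.1),  # relative motion x2 - x1
    ({2: 1.0}, 2.0, 0.5),           # noisier absolute measurement of x2
]
print(batch_estimate(3, factors))
```

With consistent measurements the estimate recovers the underlying trajectory; perturbing any measurement shifts the estimate by an amount governed by the relative factor weights.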

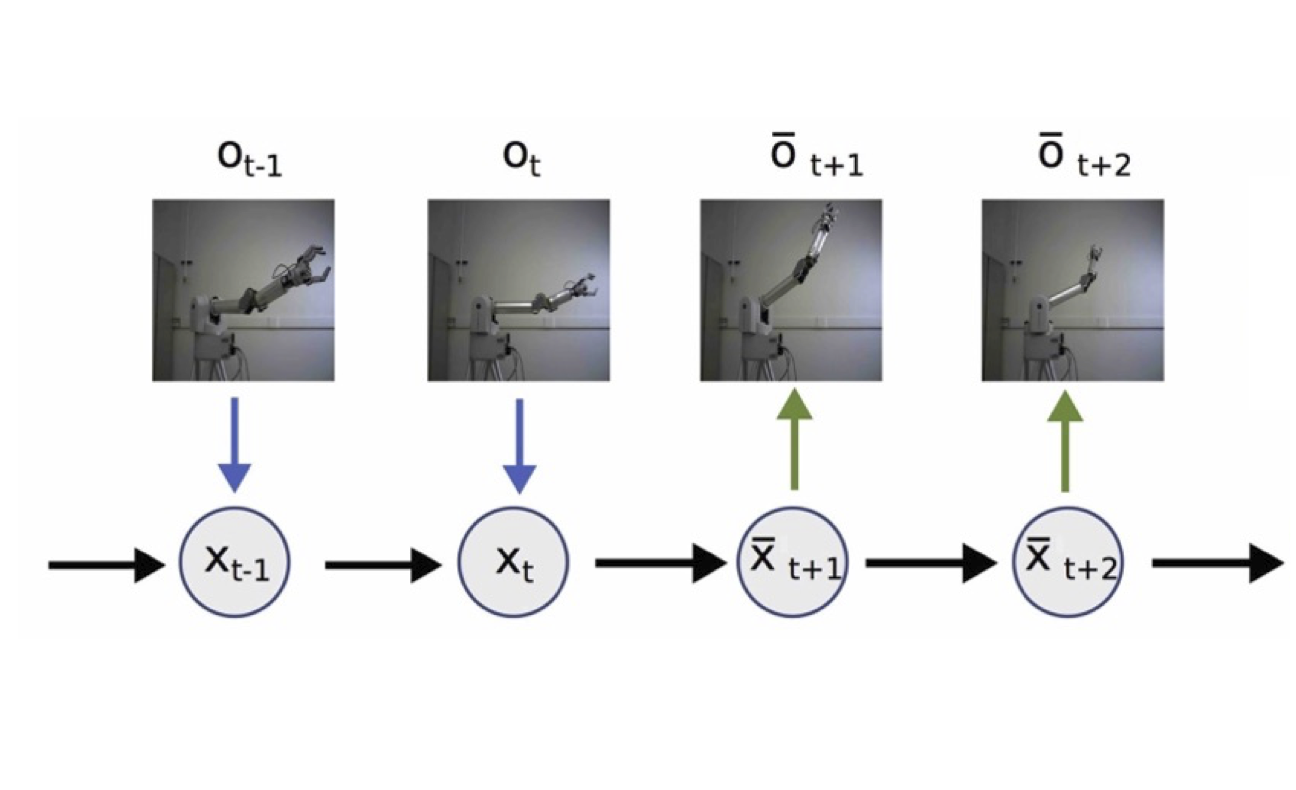

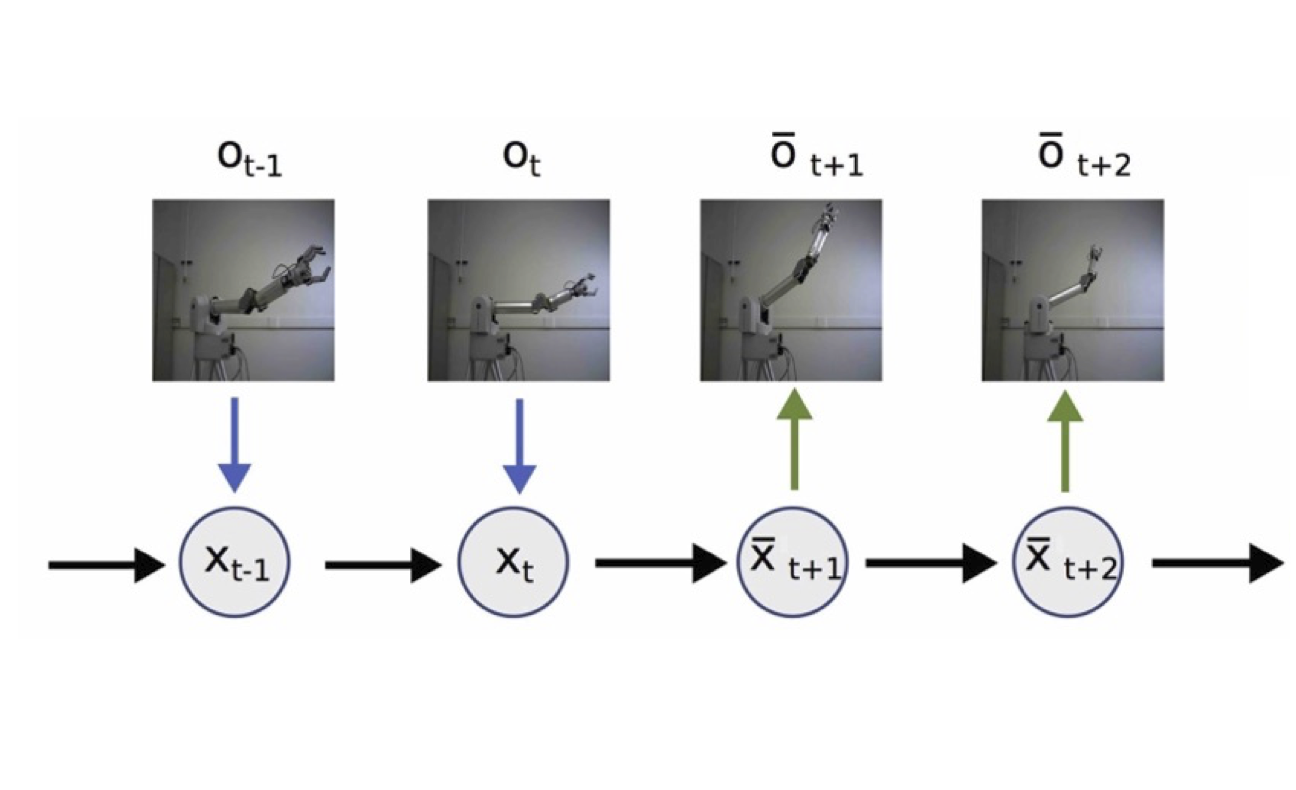

Deep Forward and Inverse Perceptual Models for Tracking and Prediction

IEEE International Conference on Robotics and Automation (ICRA), 2018

A. Lambert, A. Shaban, A. Raj, Z. Liu and B. Boots

[pdf] [video] [poster]

Abstract: We consider the problems of learning forward models that map state to high-dimensional images and inverse models that map high-dimensional images to state in robotics tracking and prediction tasks. Specifically, we present a non-parametric perceptual model for generating video frames from state with deep networks, and provide a framework for its use in tracking and prediction tasks. We show that our proposed model greatly outperforms standard deconvolutional methods for image generation, producing clear, photo-realistic images. We also develop a convolutional neural network model for state estimation and compare the result to an Extended Kalman Filter to estimate robot trajectories. We validate all models on a real robotic system.

Past Projects

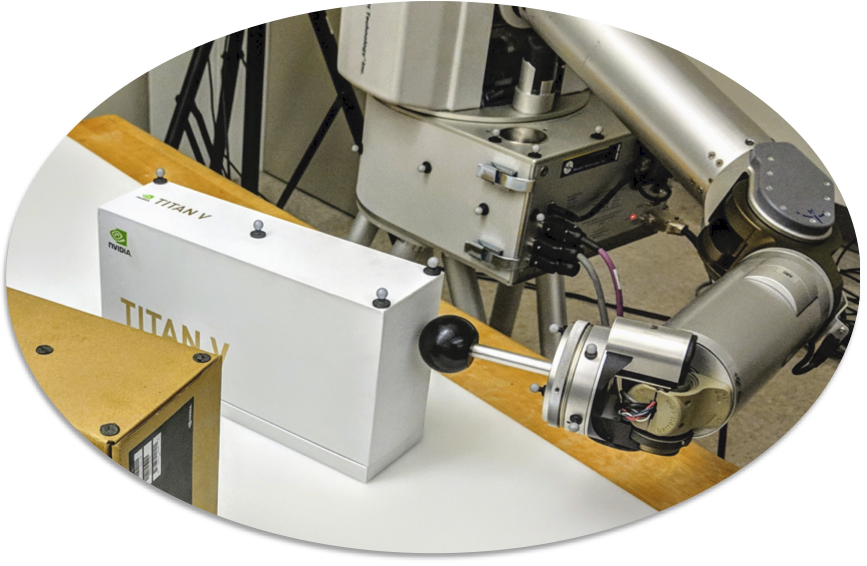

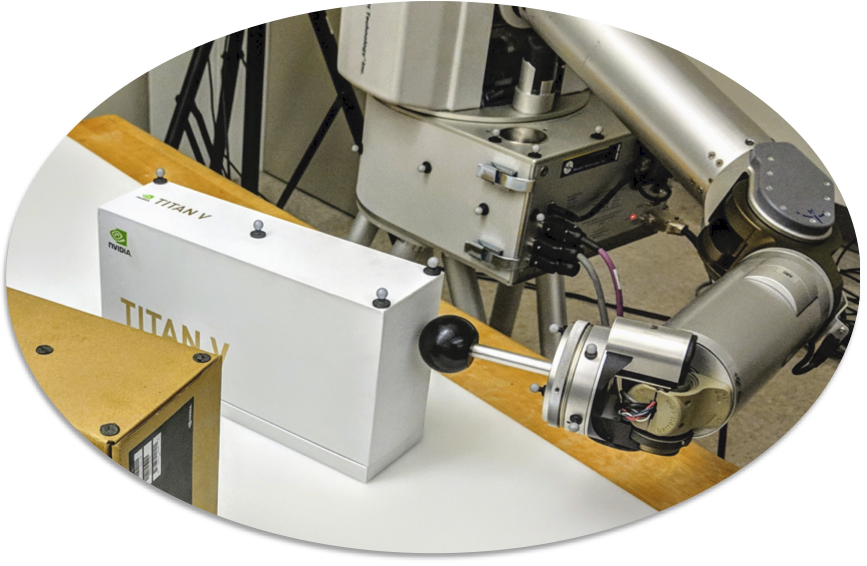

MPC for Dynamic, Underactuated Manipulation

NVIDIA (Summer Internship)

[video]

As part of my internship at NVIDIA in 2019, I developed a sampling-based MPC controller for an underactuated swing-up task on a 7-dof manipulator. The objective was to swing an object to an arbitrary target pose as fast as possible and without self-collision, which made motion under quasi-static assumptions too slow and sub-optimal. The final approach incorporated time-correlated sampling priors and goal-directed adaptation of MPC parameters, improving performance over a Model Predictive Path Integral (MPPI) baseline. The resulting controller successfully completed the task for arbitrary goal poses while optimizing the sampling distribution online. The controller was implemented in PyTorch, and experiments were conducted in the MuJoCo simulator.
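A hedged sketch of a sampling-based MPC update with time-correlated sampling noise, written in plain Python rather than PyTorch: perturbations come from a first-order autoregressive (AR(1)) process, which is one simple way to obtain temporally smooth samples but is an assumption here, and the nominal controls are updated with MPPI-style exponentiated-cost weights. The 1-D single-integrator cost, the constants, and the helper names are hypothetical; the control-cost cross term from the full MPPI derivation, the goal-directed parameter adaptation, and the 7-dof swing-up dynamics are omitted.

```python
import math
import random

H, DT, GOAL = 20, 0.05, 1.0  # hypothetical horizon, time step, and target position


def trajectory_cost(x0, u):
    """Terminal-position cost plus a small control-effort term for a 1-D single integrator."""
    x_final = x0 + DT * sum(u)
    return 10.0 * (x_final - GOAL) ** 2 + 1e-3 * sum(ui * ui for ui in u)


def correlated_noise(horizon, sigma, beta, rng):
    """AR(1) noise eps_t = beta * eps_{t-1} + sqrt(1 - beta^2) * n_t, with n_t ~ N(0, sigma^2).

    The marginal standard deviation stays sigma, but neighboring steps are correlated.
    """
    eps = [rng.gauss(0.0, sigma)]
    for _ in range(horizon - 1):
        eps.append(beta * eps[-1] + math.sqrt(1.0 - beta ** 2) * rng.gauss(0.0, sigma))
    return eps


def mppi_update(u, x0, rng, samples=64, sigma=0.5, beta=0.8, lam=1.0):
    """Shift the nominal controls by the exponentiated-cost-weighted average of the noise."""
    noises = [correlated_noise(len(u), sigma, beta, rng) for _ in range(samples)]
    costs = [trajectory_cost(x0, [a + e for a, e in zip(u, eps)]) for eps in noises]
    s_min = min(costs)  # subtract the minimum for numerical stability
    weights = [math.exp(-(s - s_min) / lam) for s in costs]
    total = sum(weights)
    return [a + sum(w * eps[t] for w, eps in zip(weights, noises)) / total
            for t, a in enumerate(u)]


rng = random.Random(0)
u = [0.0] * H
initial = trajectory_cost(0.0, u)
for _ in range(50):
    u = mppi_update(u, 0.0, rng)
print(initial, trajectory_cost(0.0, u))
```

Setting `beta=0` recovers independent per-step noise; larger `beta` yields smoother perturbations, which tend to explore coordinated, sustained pushes more effectively than white noise does.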

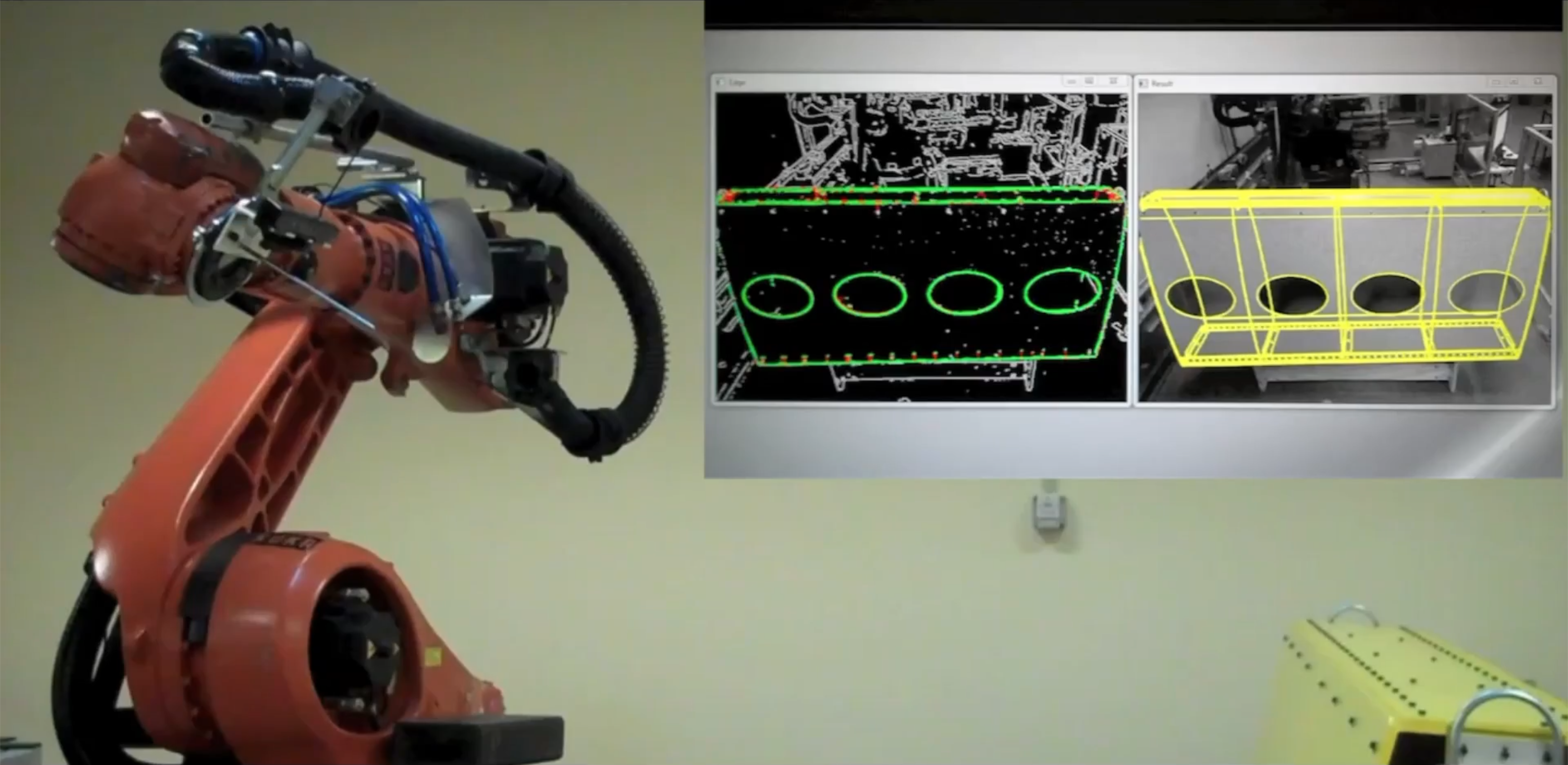

Vision-based Control for Industrial Manipulators

Georgia Tech

[video]

The aerospace industry is investing heavily in intelligent manufacturing automation to increase production agility. We developed a vision-guided control system for fixtureless wing-assembly drilling and inspection using several robot manipulators (UR5, KUKA KR210, KR500). The system consisted of a model-based object tracker for global part localization and eye-in-hand, high-resolution feature tracking for accurate visual servoing.
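For reference, the classic image-based visual servoing (IBVS) law commands a camera velocity `v = -gain * pinv(L) * e`, where `e` is the image-feature error and `L` the interaction matrix. The sketch below restricts it to translational velocity for normalized point features with known depths and a hypothetical square target; it is a textbook illustration, not the tracker-plus-servoing system described above.

```python
POINTS = [(0.1, 0.1, 1.0), (-0.1, 0.1, 1.0), (-0.1, -0.1, 1.0), (0.1, -0.1, 1.0)]  # hypothetical target (m)


def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(m)
    out = []
    for k in range(3):
        mk = [row[:] for row in m]
        for r in range(3):
            mk[r][k] = b[r]
        out.append(det3(mk) / d)
    return out


def project(cam):
    """Normalized image coordinates and depth of each point seen from a camera translated to `cam` (no rotation)."""
    feats = []
    for px, py, pz in POINTS:
        X, Y, Z = px - cam[0], py - cam[1], pz - cam[2]
        feats.append((X / Z, Y / Z, Z))
    return feats


def ibvs_velocity(cam, desired, gain=1.0):
    """Translational velocity v = -gain * pinv(L) e, with pinv(L) = (L^T L)^-1 L^T."""
    rows, err = [], []
    for (x, y, Z), (xd, yd, _) in zip(project(cam), desired):
        # Translational columns of the point-feature interaction matrix.
        rows += [[-1.0 / Z, 0.0, x / Z], [0.0, -1.0 / Z, y / Z]]
        err += [x - xd, y - yd]
    LtL = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Lte = [sum(r[i] * e for r, e in zip(rows, err)) for i in range(3)]
    return [-gain * v for v in solve3(LtL, Lte)], err


desired = project((0.0, 0.0, 0.0))
cam = [0.05, -0.03, -0.2]  # start offset from the goal pose
_, err0 = ibvs_velocity(cam, desired)
for _ in range(100):
    v, _ = ibvs_velocity(cam, desired)
    cam = [c + 0.1 * vi for c, vi in zip(cam, v)]  # integrate with a 0.1 s time step
_, err = ibvs_velocity(cam, desired)
print(cam, max(abs(e) for e in err))
```

The loop is closed purely on image-space error, so the camera converges to the goal pose without ever computing that pose explicitly; a full implementation would add the rotational columns of `L` and estimate depths online.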
